
12 Oct 2020

Get Unreal


There is a lot of buzz in the film and television industries around the use of LED screens for backgrounds rather than green screens. That in itself is not big news. What is big news is the use of video gaming technology to feed interactive, real-time content to the LED screens.

The video game industry is massive, with an income of approximately $200 billion in 2019. As a result, it drives the development of powerful computing technologies, especially commodity graphics hardware and software, which other industries then take advantage of.

For example, the DaVinci Resolve video editor became a mainstream product because of the ready availability of high-end graphics cards developed for video games. Before that, it was a $100,000 product running on dedicated hardware.

In 1998, Epic Games (the makers of Gears of War and Fortnite) developed the Unreal real-time game engine for their first-person shooter game, also called Unreal.

In 2014 they made the engine available to others by way of a subscription model, and in 2015, in a bold move, they made it available as a free download under either their Creator Licence or their royalty-based licence.

Epic’s logic was that if they made the engine easily available, the community would grow.

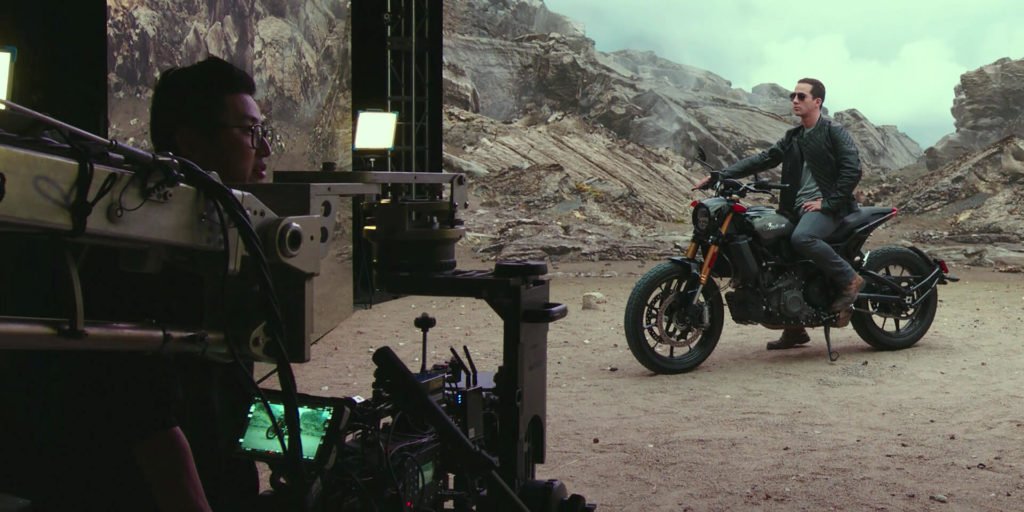

For production of the first series of ‘The Mandalorian’, Industrial Light and Magic (ILM) took the Unreal Engine and built a 360°, six-metre-high LED wall and topped it off with an LED roof to make a completely enclosed LED environment.

The screens were driven by the Unreal Engine running on four PCs. This system allowed dynamic, photo-realistic digital landscapes and sets to be live while filming, which dramatically reduced the need for green screen and produced the closest thing yet to a working “Holodeck” style of technology.

An interactive and infinitely variable set! Amazingly, this approach saved them money when compared to traditional green screen methods and the required post-production to make the green screen footage work.

Half the Mandalorian footage was shot in the Unreal Engine/LED screen environment.

But why real time technology? By putting a real-time three-dimensional tracker on the camera, the set can interact with it. This means the parallax of a scene can be perfect as the camera moves throughout a scene.

For example, think of being in a car that is driving through a forest. When you look out the window, the trees near the car zoom past, while the trees in the distance appear to slowly drift by. That is parallax in action, and for a scene to look natural as a camera moves, it also needs to have the elements in the distance move less relative to those up close.
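The forest example can be put into rough numbers. The sketch below is only an illustration, not anything taken from Unreal Engine: it assumes a tree directly beside the car, where the apparent angular speed is simply the car's speed divided by the distance to the tree. The function name and the distances are made up for the example.

```python
import math


def angular_speed_deg_per_s(speed_m_s: float, distance_m: float) -> float:
    """Apparent angular speed of an object directly beside a moving observer.

    For an object at perpendicular distance d, at the moment it is abeam,
    the angular rate is v / d radians per second.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(speed_m_s / distance_m)


if __name__ == "__main__":
    car_speed = 60 / 3.6  # 60 km/h expressed in metres per second
    # Illustrative distances only: a roadside tree and one far in the distance.
    for label, distance in [("near tree", 5.0), ("distant tree", 500.0)]:
        rate = angular_speed_deg_per_s(car_speed, distance)
        print(f"{label} at {distance:.0f} m sweeps past at {rate:.1f} degrees/s")
```

A tree 100 times further away appears to move 100 times more slowly, which is the effect a tracked camera lets the LED wall reproduce.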

There is another benefit. Because Unreal renders in real time, you could add controllers to the car’s accelerator, brake pedal and steering wheel.

Now an actor in the role of driver has total control of the scene. If he swerves left, so does the scene. If he brakes sharply, the movement stops in perfect sync. This interactivity drives a better performance from the actors because the scene responds to their actions, and they no longer have to imagine what they are working with, as they had to with green screens.
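To give a feel for how pedal and steering inputs might drive a scene frame by frame, here is a toy sketch. It does not use any Unreal Engine API; the `CarState` class, the `step` function and every limit value are assumptions invented for illustration, and the physics is deliberately crude.

```python
import math
from dataclasses import dataclass


@dataclass
class CarState:
    x: float = 0.0            # position in metres
    y: float = 0.0
    heading_rad: float = 0.0  # direction of travel
    speed_m_s: float = 0.0


def step(state: CarState, throttle: float, brake: float, steering: float,
         dt: float, max_accel: float = 3.0, max_brake: float = 8.0,
         max_turn_rate: float = 0.5) -> CarState:
    """Advance the virtual car by one frame.

    throttle and brake are 0.0 to 1.0, steering is -1.0 (left) to 1.0 (right).
    The limits are illustrative values, not real vehicle data.
    """
    accel = throttle * max_accel - brake * max_brake
    speed = max(0.0, state.speed_m_s + accel * dt)  # never roll backwards
    # A stationary car cannot change direction in this toy model.
    turn = steering * max_turn_rate * dt if speed > 0 else 0.0
    heading = state.heading_rad + turn
    return CarState(
        x=state.x + speed * math.cos(heading) * dt,
        y=state.y + speed * math.sin(heading) * dt,
        heading_rad=heading,
        speed_m_s=speed,
    )


if __name__ == "__main__":
    state = CarState()
    for _ in range(60):  # one second of full throttle at 60 frames per second
        state = step(state, throttle=1.0, brake=0.0, steering=0.0, dt=1 / 60)
    print(f"speed after accelerating: {state.speed_m_s:.2f} m/s")
    for _ in range(60):  # one second of hard braking
        state = step(state, throttle=0.0, brake=1.0, steering=0.0, dt=1 / 60)
    print(f"speed after braking: {state.speed_m_s:.2f} m/s")
```

In a real virtual production the engine would read the controllers and move the scene on every rendered frame. The point of the sketch is only that the update happens per frame, so the scene can respond immediately.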

Also, because the camera has a tracker, it can move around within the scene and maintain perfect parallax viewing throughout with the interactive action.

The fact that it is real-time opens up other opportunities. Perhaps the director wants fewer trees in the forest. Easily done. Maybe rain or fog would suit the scene. Also easily added then and there. Time of day wrong? Change it. Not liking the shadows? Fix them.

As you would imagine, the LED screens emit light and that light is from the scene itself. Therefore, the colour and brightness of the light is consistent with the scene being shot.

Imagine our car drives into a tunnel. As it passes from the bright outside into the darker, artificially lit tunnel, the change in light down the side of the car perfectly mimics what happens in real life.

The moving reflections on the car’s windscreen, chrome and paintwork are also realistic. You cannot do this with green screen.

Unreal Engine does all of this… and more.

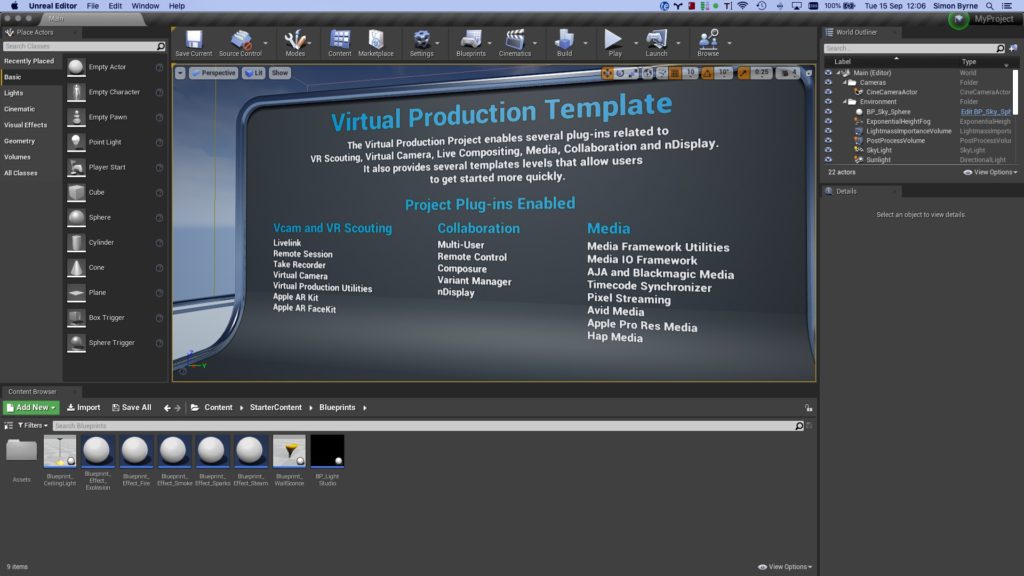

It is a state-of-the-art 3D editor and real-time engine that produces photorealistic rendering, dynamic physics and effects, lifelike animation and data translation.

It supports real environment scanning with a LiDAR Point Cloud plugin. You can laser scan a building and import the 3D data into the Unreal Engine.

That means you can import real life environments in glorious 3D.

Built on C++, the Unreal Editor, which is the software you use to create content, is available for Windows, Mac and Linux, but Windows seems to be preferred.

It requires a powerful computer, something along the lines of a high-end gaming machine with a good graphics card. With Epic Games’ Creator licence, it is free to download and use, and is 100% royalty free under that licence.

Where income is generated directly from a product that uses the engine, Epic Games charge a royalty.

The Unreal Engine natively supports Blackmagic Design and AJA I/O hardware, and supports NDI by way of a plugin. In terms of control, it has integration for linear timecode, MIDI, DMX, web and iPad control as well as 3D tracking from external devices.

One such tracking device is the HTC Vive, which is a game-oriented 3D tracker that costs only about $260. Entry-level filmmakers are mounting those to their cameras and getting great results.

The DMX plugin is particularly interesting because, as well as allowing Unreal Engine to be controlled from a lighting desk, it lets Unreal Engine output sophisticated DMX, which enables real-time lighting control driven by the rendered scene.

So when our car drives into the tunnel, Unreal Engine changes the lighting to suit the new scene.
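As a rough illustration of what driving lighting from the scene means at the DMX level, the sketch below fades a fixture as the car enters the tunnel. It is not the Unreal Engine DMX plugin's API. It relies only on the general facts that a DMX universe carries 512 channels and each channel holds a value from 0 to 255. The channel number, the light levels and the function names are assumptions chosen for the example.

```python
from typing import Mapping

DMX_CHANNELS = 512  # channels in one DMX universe


def level_to_dmx(level: float) -> int:
    """Convert a 0.0 to 1.0 brightness level to an 8-bit DMX value."""
    clamped = min(1.0, max(0.0, level))
    return round(clamped * 255)


def build_universe(levels_by_channel: Mapping[int, float]) -> bytearray:
    """Build one frame of channel data; channels are numbered from 1."""
    frame = bytearray(DMX_CHANNELS)
    for channel, level in levels_by_channel.items():
        if not 1 <= channel <= DMX_CHANNELS:
            raise ValueError(f"channel {channel} is outside 1 to {DMX_CHANNELS}")
        frame[channel - 1] = level_to_dmx(level)
    return frame


def tunnel_fade(progress: float, outdoor: float = 1.0, tunnel: float = 0.2) -> float:
    """Blend from the outdoor level to the tunnel level as progress goes 0 to 1."""
    p = min(1.0, max(0.0, progress))
    return outdoor + (tunnel - outdoor) * p


if __name__ == "__main__":
    key_light_channel = 1  # hypothetical fixture address
    for progress in (0.0, 0.5, 1.0):
        frame = build_universe({key_light_channel: tunnel_fade(progress)})
        print(f"progress {progress:.1f}: channel 1 = {frame[0]}")
```

Sending the frame to real fixtures would need a DMX interface or a network protocol, which is outside the scope of this sketch.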

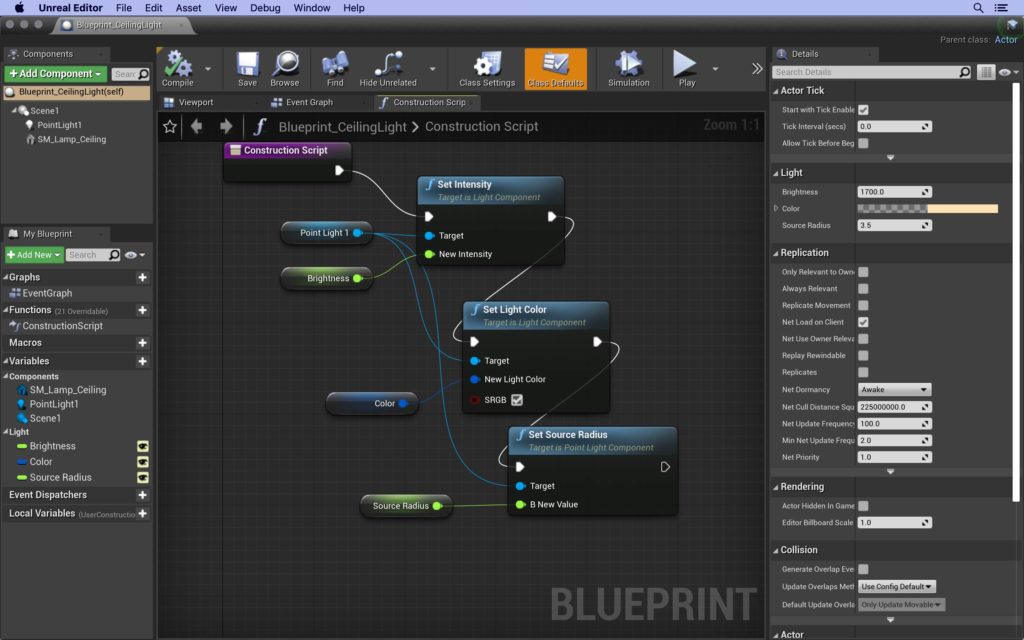

The scripting system within Unreal Engine is called Blueprints. It is a visual scripting system and a fast way to start prototyping. Instead of having to write code line by line, you do everything visually.

Drag and drop nodes, set their properties in a UI, and drag wires to connect.

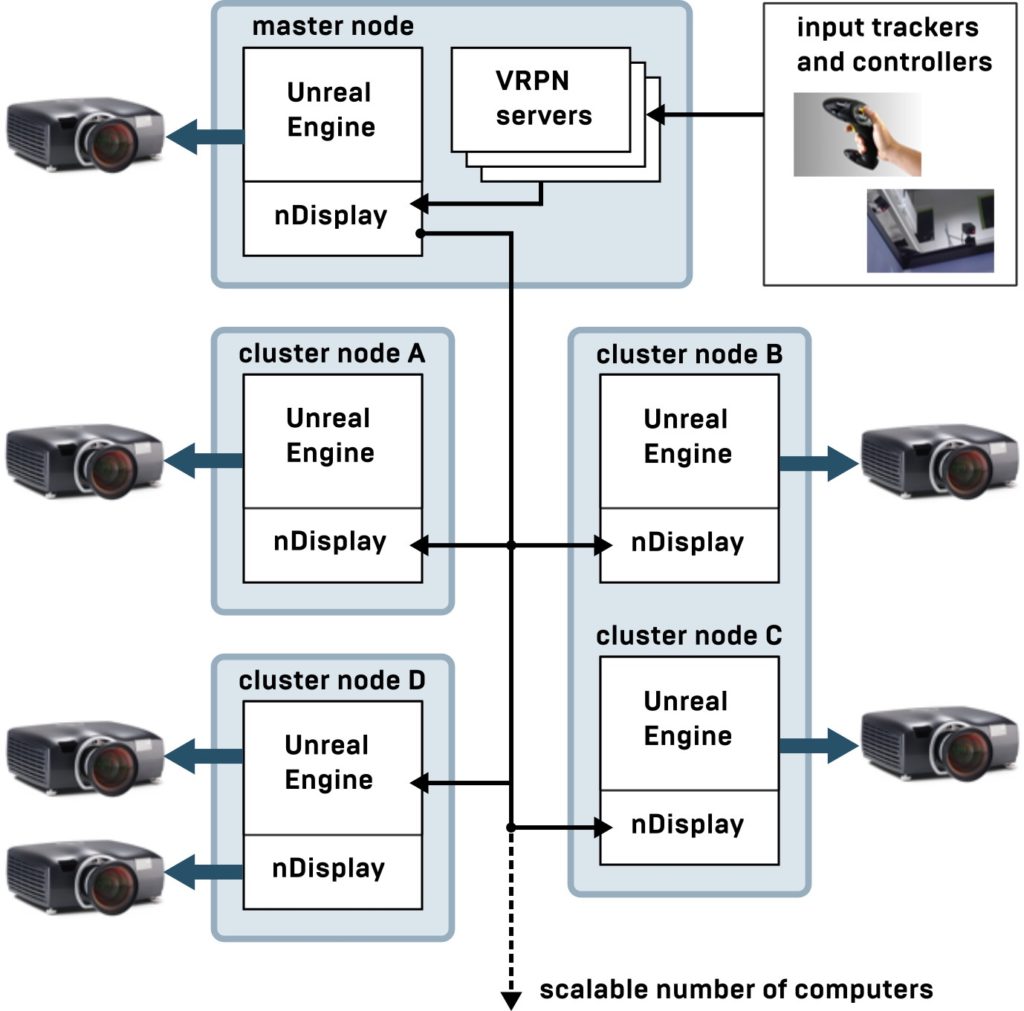

As you’d expect, Unreal Engine also does projection edge blending and LED screen mapping using its own multiscreen technology, nDisplay. nDisplay uses a master PC and multiple slave PCs, all networked over standard gigabit Ethernet.

The images are kept in frame-accurate sync using a separate genlock source fed to every computer in the network.
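The idea of sharing one large canvas between several render PCs can be sketched as follows. This is not nDisplay's configuration format or API, just an illustration of the principle. The `Viewport` class, the node names and the wall resolution are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Viewport:
    node: str    # name of the PC that renders this slice
    x: int       # left edge on the overall canvas, in pixels
    y: int
    width: int
    height: int


def split_wall(total_width: int, height: int, node_names: Sequence[str]) -> List[Viewport]:
    """Divide a wide canvas into side-by-side slices, one per render node."""
    if not node_names:
        raise ValueError("at least one render node is required")
    base, extra = divmod(total_width, len(node_names))
    viewports = []
    x = 0
    for index, name in enumerate(node_names):
        # Spread any leftover pixel columns over the first few nodes.
        width = base + (1 if index < extra else 0)
        viewports.append(Viewport(name, x, 0, width, height))
        x += width
    return viewports


if __name__ == "__main__":
    # Hypothetical four-PC cluster driving a 7680 x 1080 pixel wall.
    nodes = ["master", "slave-1", "slave-2", "slave-3"]
    for viewport in split_wall(7680, 1080, nodes):
        print(viewport)
```

Each PC renders only its own slice, and the shared genlock signal is what keeps all the slices changing frame at the same instant.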

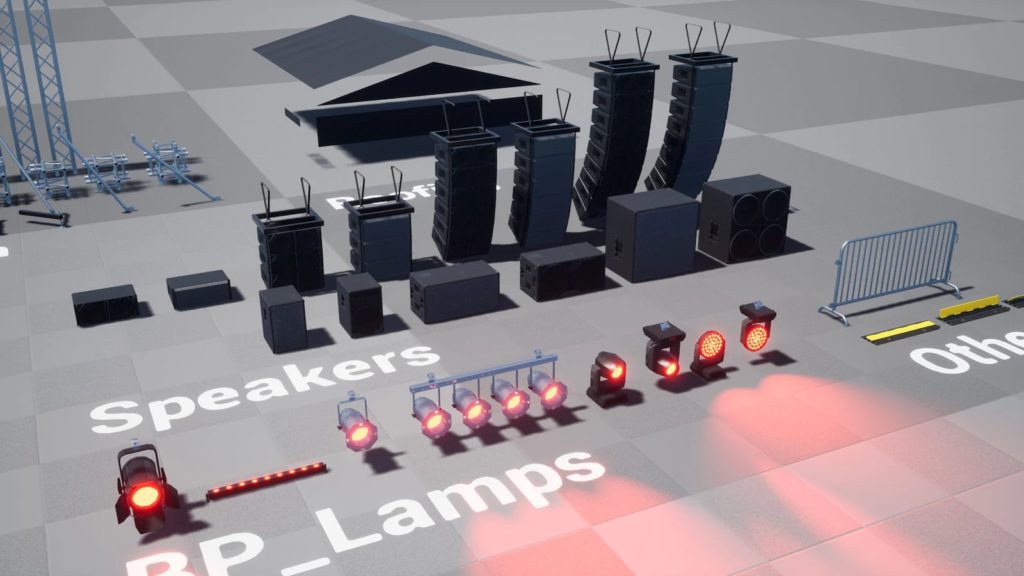

Unreal Engine is supported by a very healthy community and marketplace. The marketplace offers scenes, textures, Blueprints and plugins that others have developed for the engine. Some must be purchased, but many are free.

For example, there is a plugin that connects a Panasonic AW-UE150 PTZ camera to the engine. The plugin calculates the PTZ camera position as well as the pan, tilt, zoom, and focus parameters from the camera and applies those settings to your project.

If the camera moves, the engine reacts accordingly. In the community forums, there are literally thousands of topics being discussed. If you are stuck, you will quickly find a solution and if you cannot, there are thousands of other community users ready to help out.

In terms of training, the people at Epic Games have an extensive library of online courses which are also free. I was impressed with these. They are properly structured, well thought through courses complete with good instructors and support materials.

In these COVID-19 times, if you own some LED screens, virtual sets driven by the Unreal Engine might be a new market for you. If you are a tech with some free time, the market for Unreal Engine skills is definitely growing, and you may want to get into it.

I’m glad Epic Games make obscene amounts of money in the video game industry. It means industries like ours get to play with their real time virtual 3D worlds at extremely low cost.

Worth checking out.

Download the Unreal Engine

unrealengine.com/en-US/get-now

Epic’s Unreal Engine online courses

unrealengine.com/en-US/onlinelearning-courses

The Virtual Production of The Mandalorian using Unreal Engine

youtu.be/gUnxzVOs3rk

Also from CX October:

LED Walls: A Film Production Revolution by Simon Barnett, CX Magazine October 2020 p.32.

Why LED screens are replacing green screens by Nathan Wright

Related reading from Simon Byrne also on the CX Network:

Free Video Editing Davinci Resolve

https://www.cxnetwork.com.au/free-video-editing-davinci-resolve

Formats Containers and Codecs

https://www.cxnetwork.com.au/video-file-formats-containers-and-codecs

Professional Video Over IP

https://www.cxnetwork.com.au/professional-video-over-ip

CX Magazine – October 2020

LIGHTING | AUDIO | VIDEO | STAGING | INTEGRATION

Entertainment technology news and issues for Australia and New Zealand

– in print and free online www.cxnetwork.com.au

© VCS Creative Publishing
