Theatre

4 Aug 2022

The Picture of Dorian Gray


The tech of the world-leading, game-changing production

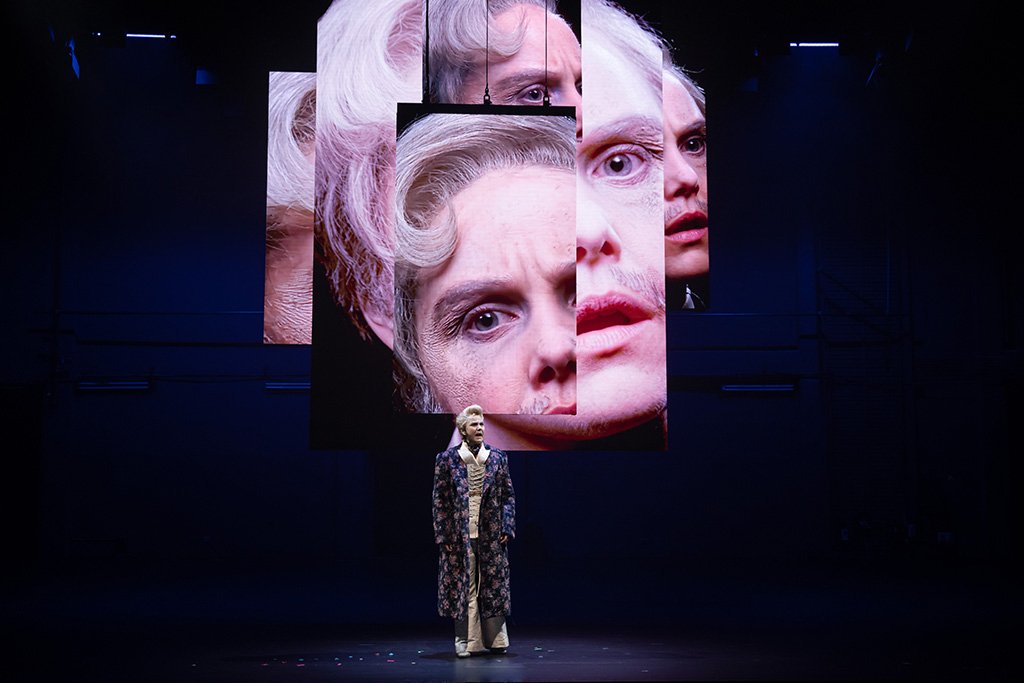

Sydney Theatre Company’s The Picture of Dorian Gray, presented by Michael Cassel Group, has completely redefined what is possible on the stage. With Eryn Jean Norvill playing all 26 roles, ultra-precise live camera work, motorised LED screens, totally novel staging, live image manipulation, and unmitigated technical audacity combine to create a piece that will change theatre practice globally.

Every rule and limitation of theatre is shattered and pulverised before being reshaped into something completely new. Sets are positioned upstage facing away from the audience. Millimetre-precise blocking on the part of both the star and the crew sees the performer believably interacting with up to seven other versions of herself on multiple moving LED screens, even handing herself props thanks to some incredible Assistant Stage Managers.

Every new section of the show introduces another technique that no stage has ever seen before. Every night of the show’s runs in both Sydney and Melbourne has erupted into a standing ovation. I was ready to give it a standing ovation after 20 minutes. This production is an artistic and technical masterpiece and was completely created in Sydney by Australians. It will conquer the world.

Live Video Pioneers

Celebrated director Kip Williams adapted the text of Oscar Wilde’s 1890 novella for this production, cannily understanding that the themes of vanity and self-obsession translated perfectly to the modern visual language of screens, Instagram, and Snapchat. Kip has made extensive use of live video throughout his career and intended Dorian Gray to be a tour-de-force in the use of the technique.

Video Designer David Bergman had worked with Kip and live cameras previously, most notably on Sydney Theatre Company’s A Cheery Soul in 2018. “With Dorian Gray, we deliberately set out to make the most exciting and technologically sophisticated show that any of us had ever worked on,” says David. “We were working off the back of smaller productions that had used similar tech. Kip had done a lot of shows using live cameras, but none of us had attempted anything this complicated before. The initial creative conversations were about finding the most exciting things we could think of from an audience point of view, and incorporating ideas from films we love.”

The show begins with the actor addressing the audience with one LED screen hanging centre in portrait mode. As the show goes on, more LED screens are introduced and fly, track, move, combine, break apart, and interact. “All of the video techniques and movement lead the audience through a visual journey of how the ‘language of the cameras’ works,” explains David. “And we constantly change the rules through the show. It keeps it exciting for the audience and keeps the crew on our toes. We can never rest, and the next chapter is always the next step up.”

The Choreography of the Cameras

Three wired cameras on tripods and two wireless cameras on Steadicam rigs are crewed by operators drawn from film and television. Without spoiling the show for those who have not seen it, they are performers as much as the star. The demands on them are precise in the extreme. I have never seen so many performers hit so many marks so accurately for that long.

“We call it the ‘choreography of the cameras’,” illustrates David. “They constantly have to re-shift and reimagine their aspect ratio, framing Eryn up in the right spot in the right frame, every time. They’re constantly thinking of how the frame is used; sometimes square, sometimes extreme vertical.”
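As a rough illustration of the framing arithmetic behind those shifting aspect ratios, here is a minimal Python sketch. It assumes a 1920×1080 camera frame and a simple centred crop; the function name and the frame size are hypothetical, and the article does not say how or where the production actually crops its camera feeds.

```python
def centered_crop(frame_w, frame_h, aspect_w, aspect_h):
    """Return (x, y, w, h) of the largest centred crop with aspect_w:aspect_h.

    Hypothetical helper for illustration only; integer maths avoids
    rounding surprises.
    """
    if aspect_w * frame_h >= aspect_h * frame_w:
        # Target is at least as wide as the frame: keep full width, trim height.
        w = frame_w
        h = frame_w * aspect_h // aspect_w
    else:
        # Target is narrower than the frame: keep full height, trim width.
        h = frame_h
        w = frame_h * aspect_w // aspect_h
    x = (frame_w - w) // 2
    y = (frame_h - h) // 2
    return x, y, w, h


square = centered_crop(1920, 1080, 1, 1)    # a square frame
vertical = centered_crop(1920, 1080, 1, 3)  # an "extreme vertical" frame
```

On these assumptions, an extreme vertical frame keeps less than a fifth of the sensor width, which gives a sense of how little margin an operator has when placing the performer in shot.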

Video Supervisor Michael Hedges is in charge of the video crew and the tech they employ. A demanding two hours with no interval, the show is an extreme feat for everyone on stage. “We all work with the camera operators and make sure they’re in good health and good spirits,” says Michael. “With some of them coming from a totally different working environment in film, we have helped them adjust to theatre schedules and calls, and they in turn have trained some theatre personnel, assistant directors and the like, to become Steadicam operators.”

Dorian Gray may only have one official cast member, but each show includes 18 crew performing. Stage management, cameras, lighting, sound, radio techs, and mechanists; at curtain, everyone takes a bow. The sheer precision of every moving part means they’ve earned it. “When interacting with the pre-recorded video elements, repeatability is the hardest,” confirms Michael. “There are two shots that have to be millimetre perfect. Rehearsing these, we locked off the shot, noted the degrees, and spiked the position. Getting it right every show isn’t magic, just a whole lot of theatre craft. It’s hardcore precision, and the camera team have to get it right night after night.”

The Secret Weapon – disguise

At the heart of the video system, a disguise 4x4pro media server runs the show. Its capabilities are not only essential to the smooth running of the production but were necessary for its creation.

“This is my first show using disguise,” relates David. “It’s the secret to our success in terms of how we managed some of these ridiculous sequences. We absolutely relied on its previsualisation tools. A lot of the creative process started in Lockdown #1, 2020, in Sydney. From home, I was able to build a rendered 3D model of all the screens, and run content on them. We’d Zoom with the creative team, have discussions about what screen went where, and which configuration would work with each chapter.”

During rehearsals, the video team ran disguise in the room. Live cameras fed into disguise’s previsualisation engine, sending the real video into the simulated screens. The output was fed to a monitor, and Kip Williams got to see the show from the audience’s point of view. Switching between pre-vis presets for points-of-view throughout the auditorium enabled the video crew to create pre-recorded video that worked with the audience’s sightlines, creating the realistic illusion of multiple characters sharing the stage.

Video Land

The three main wired cameras are Sony broadcast models owned by Sydney Theatre Company, which were purchased for other productions, as were the two Steadicam cameras. “We developed our own hybrid Steadicam rigs for this show to make it light,” says David. “A traditional Steadicam set-up on a film shoot is only worn for 10 or 15 mins because it’s so heavy. We’re using a DJI Ronin gimbal on a Steadicam arm, which took a couple of kg off the weight, making the whole rig 9.5 kg. We have multiple operators and stagger them on the rigs through the show.”

The wired cameras output SDI to a Blackmagic Design Smart Videohub 20×20 switcher. The two wireless cameras transmit using a Teradek Bolt 4K 750. From the switcher, four out of the five camera feeds go into disguise, which is running all of the pre-recorded video content and mapping to the screens. An ETC Ion desk, purely for video control, triggers all cues in disguise via sACN. Video Land in Melbourne’s Playhouse is below the stage. There are two operators for video: one on the Ion, controlling disguise, the switcher, network monitoring, and a computer system for effects (more on that later), and the other controlling focus, iris, and zoom on the cameras via remote control.
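For readers curious what “four out of five feeds” might look like as a routing change, here is a minimal Python sketch. It assumes the text-based block format described in Blackmagic’s Videohub Ethernet Protocol documentation (a `VIDEO OUTPUT ROUTING:` header, zero-indexed `output input` lines, and a blank line to finish). The input and output numbers are hypothetical, and the article does not say how the production actually drives its switcher.

```python
def videohub_routing_block(routes):
    """Build a routing block from a {output_index: input_index} mapping.

    Assumes the Videohub text protocol layout; indices are zero-based.
    The block is terminated by a blank line.
    """
    lines = ["VIDEO OUTPUT ROUTING:"]
    for output, source in sorted(routes.items()):
        lines.append(f"{output} {source}")
    return "\n".join(lines) + "\n\n"


# Hypothetical patch: five cameras on inputs 0-4, four media server
# capture inputs fed from outputs 0-3. Camera 3 (input 2) is left out here.
block = videohub_routing_block({0: 0, 1: 1, 2: 3, 3: 4})
```

In practice a block like this would be sent over a TCP connection to the switcher; that step is left out so the sketch stays self-contained.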

The remote camera control is a mixture of cabled serial control sent to iris and focus, and a ‘homebrew’ solution for zoom. “If you go down into Video Land, you’d think the camera op is flying a couple of model planes,” jokes Michael. “It’s a radio controller, which goes to an on-camera RC motor to control the zoom.”

Simple Motion

The flying LED screens are made of ROE Visual Onyx 3.5mm pitch panels supplied by Technical Direction Company. The automated motor system that flies them around with breath-taking accuracy was custom-built for the STC by Sydney automation company Simple Motion.

“disguise is receiving position data from the automation system, which is used to map the content,” continues Michael. “Each screen is on its own fixed truss, with a built-in hoist to fly them in, out, and track. The system tours with the show, so it doesn’t need much reprogramming when you come into a new venue. Simple Motion have also developed an anti-swing algorithm running in the background which means the screens can move sideways extremely quickly without swinging. It’s a system developed from container shipping technology, where they lift huge weights on long cranes. The system knows how much weight it’s carrying and how long the cable is, so it knows when to slow down at what exact ratio to cancel out any swing. Its full speed is pretty exciting – it can traverse two metres per second and stop on a dime! We never use it anywhere near that.”
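Simple Motion’s algorithm is not described in detail, but the general idea of cancelling swing from known cable length can be sketched with a textbook technique called input shaping. The Python below is a minimal illustration under stated assumptions: it treats a screen as an idealised, undamped point-mass pendulum and uses a two-impulse “Zero Vibration” shaper. All function names are hypothetical, and this is not claimed to be the method used in the show. In this simplified model only cable length sets the swing period; the real system, as Michael notes, also takes the load’s weight into account.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def pendulum_period(cable_length_m):
    """Swing period of an idealised pendulum (small angles, no damping)."""
    return 2 * math.pi * math.sqrt(cable_length_m / G)


def zv_shaper(cable_length_m):
    """Two-impulse Zero Vibration shaper as [(time_s, amplitude), ...].

    Half the move command is issued now and half one half-period later,
    so the swing started by the first impulse is cancelled by the second.
    """
    half_period = pendulum_period(cable_length_m) / 2
    return [(0.0, 0.5), (half_period, 0.5)]


def residual_swing(shaper, cable_length_m):
    """Residual swing relative to an unshaped command (0 means cancelled)."""
    omega = 2 * math.pi / pendulum_period(cable_length_m)
    c = sum(a * math.cos(omega * t) for t, a in shaper)
    s = sum(a * math.sin(omega * t) for t, a in shaper)
    return math.hypot(c, s)


shaper = zv_shaper(4.0)                   # tuned for a 4 m cable
matched = residual_swing(shaper, 4.0)     # effectively zero
mismatched = residual_swing(shaper, 2.0)  # wrong length: swing remains
```

The mismatched case shows why the controller needs to know the cable length at every moment: a shaper tuned for the wrong length leaves a substantial swing behind.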

Wi-Fi and Smartphones

Perhaps the most terrifying technical moments of the show are when the entire production hinges on live video being sent from an iPhone held by Eryn Jean Norvill, who uses its camera and live face manipulation to play multiple characters in real time. There are whole sections reliant on a consumer electronic device on Wi-Fi. What could go wrong?

“We learnt a lot of lessons from an STC production of Julius Caesar, which had actors using nine iPhones, in the round,” divulges Michael. “For instance, you can’t have two iPhones streaming beside each other within half a metre or they take turns sending frames. It was trial and error to get every little bit of performance out of the system. We’re now using a UniFi Wi-Fi 6 network with long-range access points. Its transmitters managed to cut through the RF noise. We have two access points, one upstage and one downstage. Line of sight to the access point is vital. We’ve tested for RF interference from the LED screens, from dimming lights, and for how much metal we can work around. One of the things we found is that the longer the phone stands still, the better the connection. We block to that, and work with it.”

Later in the show Eryn is swinging and diving between phones with only moments in between, and there’s a bit of necessary theatrical deceit going on here. “When we tried live iPhone in-app image manipulation in other shows, we found it’s too fiddly for the actors to do while performing,” confides Michael. “The iPhone outputs NDI video, which goes into three Mac Minis. They rotate the vision, and run it through Snap Camera, the desktop version of Snapchat. That applies face filters, and the video goes out via NDI to disguise. The section where all the face filters are applied is sequenced. The Macs receive MIDI commands via disguise which are translated into keypresses.”
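The last step of that chain, turning a MIDI command into a keypress, can be sketched in a few lines of Python. This is an illustration only: it assumes the commands are standard three-byte MIDI note-on messages, and the note-to-key table is entirely hypothetical. The article does not say which MIDI messages or keys the production uses, and actually pressing the key on the Mac is left out.

```python
# Hypothetical mapping from MIDI note number to the key that selects a filter.
NOTE_TO_KEY = {60: "1", 61: "2", 62: "3"}


def midi_to_key(message):
    """Translate a raw 3-byte MIDI message into a key name, or None.

    Only note-on messages (status 0x90-0x9F) with a non-zero velocity
    count as triggers; a note-on with velocity 0 is treated as a note-off.
    """
    if len(message) != 3:
        return None
    status, note, velocity = message
    is_note_on = (status & 0xF0) == 0x90 and velocity > 0
    if not is_note_on:
        return None
    return NOTE_TO_KEY.get(note)


key = midi_to_key(bytes([0x90, 61, 100]))  # note-on, note 61, velocity 100
```

Keeping the translation as a plain lookup table means the filter sequence can be changed by editing cues at the media server end, without touching the effects machines.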

Take a Bow

“Dorian Gray is a tour-de-force of everyone stepping up and giving their all,” attests Michael. “You have to trust the vision. Most of the people on stage haven’t seen the show and can’t really envision what it actually feels like to watch it; they just see the looks on people’s faces when they get a standing ovation every night. Until someone asks you to reach for perfection, you don’t know just how high you can reach. Most of the time, near enough is good enough, but this is a show where it isn’t. You have to hit the mark, and if you can’t, we have to figure out how.”

At the time of their interviews, both David and Michael were already working on a new production with the same creative team: an adaptation of Robert Louis Stevenson’s The Strange Case of Dr Jekyll and Mr Hyde. They’re taking ideas and techniques learnt from Dorian Gray, and upgrading the tech. “We’re using a commercially available, professional system for camera control on Jekyll and Hyde,” teases Michael. “We’re now using Tilta Nucleus M with motors and digital read-outs for precision control. We’ve also developed custom electronics to wirelessly control the iris.”
